# Privacy-Preserving Agent-to-Agent Collaboration for Google's A2A Ecosystem

:::success

:lock: Enabling secure, compliant, and scalable multi-agent workflows with advanced cryptography.

**By [Silence Laboratories](https://www.silencelaboratories.com/)**

:::

## Executive Summary



As enterprises increasingly rely on autonomous AI agents to automate complex workflows, cross-organizational agent collaboration has become essential for competitive advantage. However, this new era of cross-company agentic collaboration presents a critical challenge: organizations need the benefits of contextual data sharing and multi-agent synergies while maintaining rigorous control over privacy, intellectual property, and regulatory compliance. Moreover, evaluating agents in isolation does not adequately reflect their real-world effectiveness, *as multi-agent systems inherently depend on their collective interactions to deliver intended outcomes*. Traditional data protection methods fail in environments where agents rapidly interconnect, make autonomous decisions, and process sensitive information in real time.

Enterprise surveys indicate that privacy concerns prevent significant portions of enterprise data from participating in cross-company workflows. Organizations with the most valuable data—financial institutions, healthcare systems, and industrial manufacturers—face regulatory constraints, intellectual property protection requirements, and customer data sovereignty obligations that effectively bar them from A2A collaboration despite clear business value.

Silence Laboratories addresses this adoption barrier with the Agent-to-Agent Cryptographic Computing Virtual Machine (A2A-CCVM)—a framework that integrates advanced cryptographic computing directly into the A2A protocol. A2A-CCVM empowers AI agents to perform joint computations on private data, ensuring that sensitive information remains mathematically protected throughout the collaborative process. This enables fully interoperable workflows where data confidentiality becomes a feature, not a trade-off.

By providing mathematical guarantees of data confidentiality, the A2A-CCVM addresses privacy and trust concerns head-on, unlocking efficiencies in secure data enrichment, private model evaluation, and other high-value collaborative scenarios. Our approach transforms what is currently a primary barrier to widespread A2A adoption—data privacy—into a foundational strength, enabling organizations to leverage cross-company agent workflows securely and effectively.

## Key Capabilities and Business Value

* **Business acceleration:** Removes data privacy as the primary barrier to A2A adoption, enabling organizations to safely automate and scale agent-powered processes across organizational boundaries

* **Programmable privacy:** Secure Multi-Party Computation (MPC), Homomorphic Encryption, and Zero-Knowledge Proofs allow agents to share computational results without exposing raw data or proprietary models

* **Industry-agnostic design:** Supports high-value scenarios across finance, healthcare, and supply chain—from private set intersection to secure supplier evaluation—without revealing underlying assets

* **Regulatory readiness:** Built-in mathematical assurances and verifiable consent mechanisms provide robust compliance and accountability frameworks

* **Proven cryptographic foundation:** Built on Silence Laboratories' production-tested MPC infrastructure, including sub-20ms threshold signature schemes currently deployed in digital asset applications

With A2A-CCVM, organizations can unlock the potential of agentic automation while maintaining rigorous control over their most sensitive assets.

## Introduction

This whitepaper presents Silence Laboratories' vision for privacy-preserving agent collaboration and outlines strategic partnership opportunities within Google's A2A ecosystem. We examine the technical architecture, key use cases, and business value of cryptographic computing in agent workflows—demonstrating how organizations can accelerate A2A adoption while maintaining mathematical guarantees of data confidentiality.

The document addresses three critical questions for enterprise leaders and technology partners: How can organizations capture the value of cross-company agent collaboration without compromising sensitive data? What technical capabilities are required to enable privacy-preserving multi-agent workflows at scale? And how can strategic partnerships accelerate the deployment of secure agent ecosystems across industries?

Our analysis reveals that while Google's A2A protocol provides the foundational infrastructure for agent interoperability, privacy concerns remain the primary barrier to widespread enterprise adoption. By layering cryptographic computing capabilities onto the A2A protocol, we can transform this barrier into a competitive advantage—enabling new classes of secure, automated workflows that were previously impossible due to data sharing constraints.

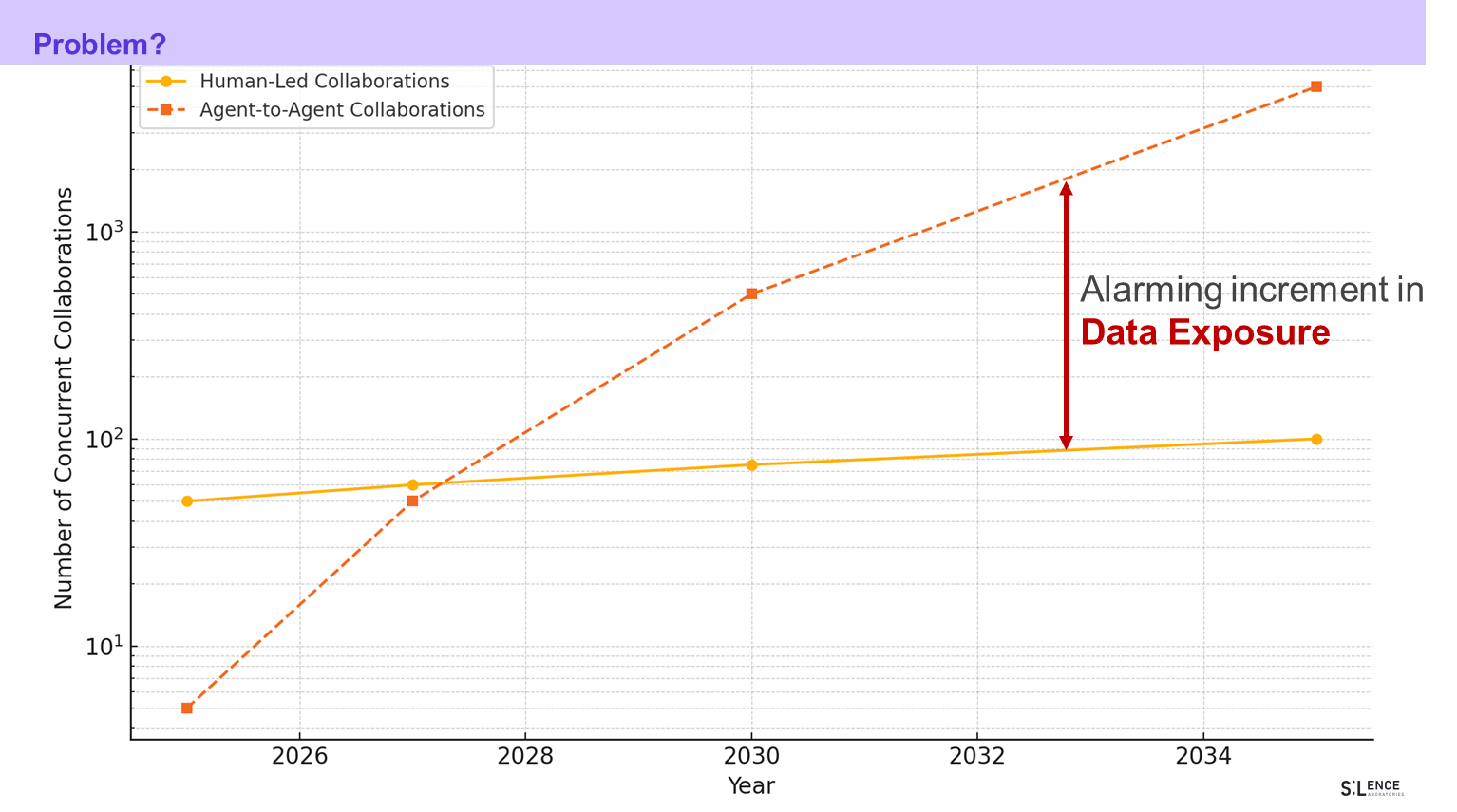

## A. Growth in A2A-Based Collaborations

A surge of cross‑company A2A traffic is coming. [Google’s A2A launch](https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/) notes that enterprises are already wiring agents “across their entire application estates,” and more than 50 major vendors have committed to interoperable agents so that tasks can hop between organizations and clouds without manual glue code. Analysts echo the scale‑up: Gartner projects the agentic‑AI tools market will grow from ≈[$6.7 billion in 2024 to $10.4 billion](https://superagi.com/top-5-agentic-ai-trends-in-2025-from-multi-agent-collaboration-to-self-healing-systems/) in 2025 (56% CAGR) as multi‑agent collaboration becomes mainstream in customer service, finance and healthcare.

:::success

:link: Agent Protocols unlock 1000s of Cross-Company links—instantly.

:::

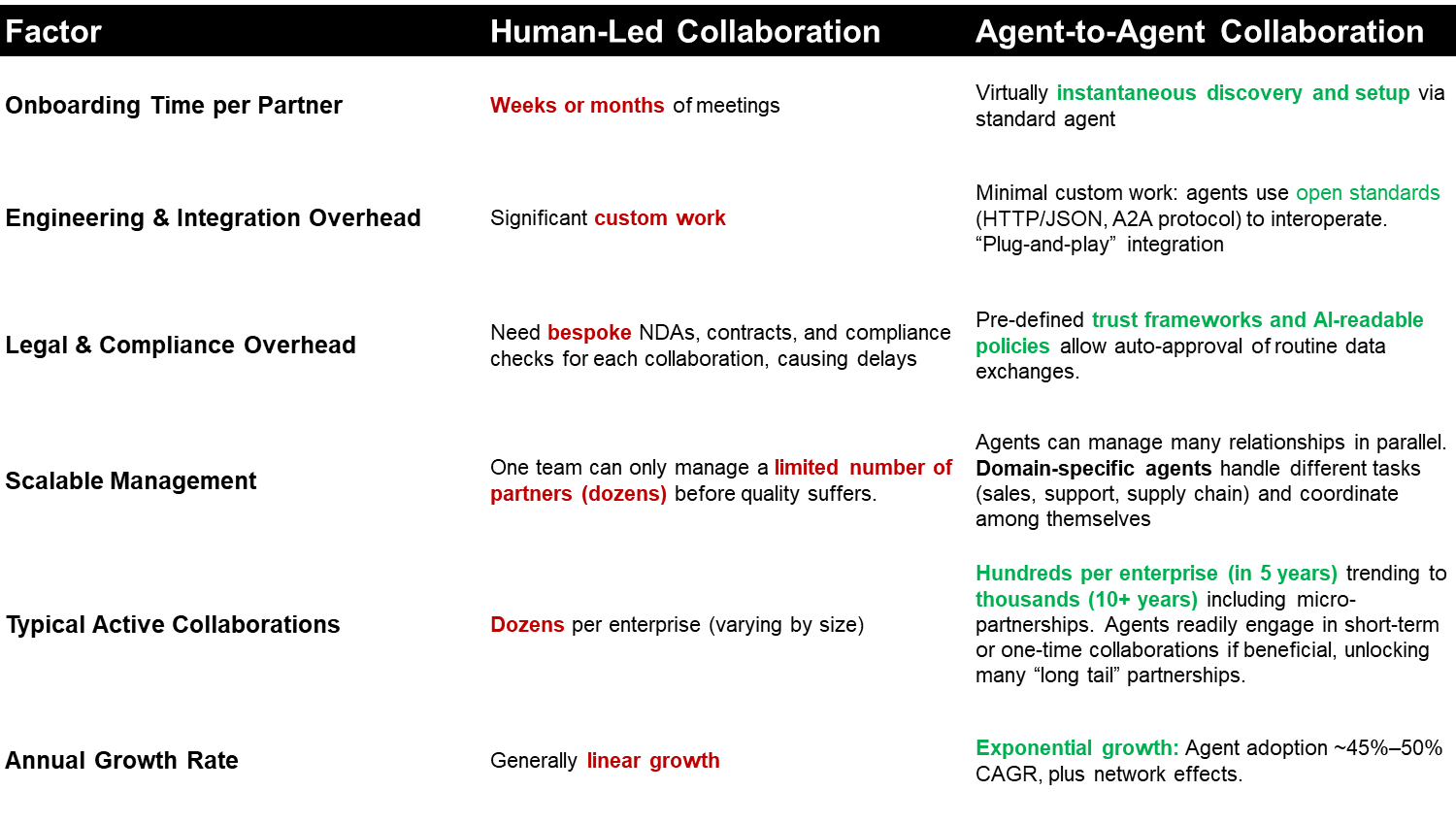

### A.1 What Is Driving This Growth?

Open‑standard handshakes such as Google’s **Agent‑to‑Agent (A2A) protocol** already ship with backing from **50+ cloud, SaaS and SI partners—Salesforce, SAP, ServiceNow, Deloitte, Infosys, etc.**—meaning every new product release can arrive “agent‑ready” out‑of‑the‑box. ([Google Developers Blog][1]) Unlike earlier API ecosystems that required bespoke glue code, A2A exposes a universal JSON‑RPC and streaming model that any LLM‑driven tool can call in a single line, collapsing integration time from weeks to minutes.
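For concreteness, here is a minimal sketch of the kind of JSON‑RPC 2.0 request body one agent might send to a peer. It assumes the `message/send` method and the `role`/`parts`/`messageId` field names used in recent versions of the public A2A specification (early drafts used `tasks/send` with slightly different fields), so verify the names against the spec version you target; `build_send_request` is a hypothetical helper, not part of any official SDK.

```python
import json
import uuid


def build_send_request(text: str, request_id: int = 1) -> str:
    """Return a JSON-RPC 2.0 request body asking a peer A2A agent to handle `text`.

    Method and field names follow recent public A2A spec versions (assumption).
    """
    payload = {
        "jsonrpc": "2.0",          # protocol version string required by JSON-RPC 2.0
        "id": request_id,          # echoed back in the response for correlation
        "method": "message/send",  # assumed A2A method name (early drafts: "tasks/send")
        "params": {
            "message": {
                "role": "user",
                "parts": [{"kind": "text", "text": text}],
                "messageId": str(uuid.uuid4()),  # unique id for this message
            }
        },
    }
    return json.dumps(payload)


if __name__ == "__main__":
    print(build_send_request("Quote 500 kg of compound X for Q3 delivery"))
```

The body would be POSTed to the endpoint URL advertised by the peer agent; the streaming variant follows the same envelope with a different method name.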

The drivers are combinatorial: each enterprise may run hundreds of internal agents, but with A2A every one of those can now talk to hundreds of partner agents—an **n × m explosion** that dwarfs the linear growth of human‑led APIs. Cost dynamics reinforce the curve: an A2A hand‑off (fractions of a cent) is orders‑of‑magnitude cheaper than a human escrow or bespoke integration. Finally, competitive pressure pushes firms to expose capabilities quickly; those that resist agent interoperability risk being bypassed by ecosystems that can self‑compose services in real time. Together these factors create a flywheel in which **open protocol + vendor mass‑adoption + combinatorial connectivity + cost advantage** make A2A growth steep, fast, and inevitable—unless privacy concerns become the brake.

:::success

#### Why cross‑company A2A will outpace human‑led collaboration

1. **Setup time collapses** – Agents discover each other via public Agent Cards (A2A spec §4) and begin work in seconds; humans still negotiate NDAs and SOWs for weeks.

2. **Zero‑marginal integration cost** – One JSON‑RPC+streaming standard replaces bespoke API stitching; every new partner is “plug‑and‑play.”

3. **AI‑readable trust policies** – Machine‑verifiable consent and access rules replace manual legal reviews, letting thousands of pairs spin up safely.

4. **Combinatorial networks** – If each firm runs *n* internal agents and connects to *m* partner agents, potential pairs scale as *n × m*, orders of magnitude more than human partnerships capped in the dozens.

5. **Continuous optimisation** – Agents monitor outcomes and re‑wire collaborations autonomously; humans iterate quarterly at best.

6. **Lower risk barrier** – With cryptographic privacy layers (MPC via the CCVM), firms can share value without sharing raw data, removing the biggest blocker to rapid cross‑boundary growth.

:::
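Discovery works by each agent publishing a machine‑readable Agent Card that peers fetch from a well‑known URL. The sketch below builds a minimal card and derives that URL. The field names (`name`, `description`, `url`, `version`, `capabilities`, `defaultInputModes`, `defaultOutputModes`, `skills`) follow the public A2A specification as we understand it; the well‑known path has changed between spec versions (`agent.json` in early drafts, `agent-card.json` later), and the agent name, host, and PSI skill shown here are hypothetical.

```python
import json
from urllib.parse import urljoin

# Discovery path: "agent.json" in early A2A drafts; later versions use "agent-card.json".
WELL_KNOWN_PATH = "/.well-known/agent.json"


def agent_card_url(base_url: str, path: str = WELL_KNOWN_PATH) -> str:
    """Return the URL where a peer would look for this agent's card."""
    return urljoin(base_url, path)


def build_agent_card(base_url: str) -> dict:
    """Minimal Agent Card advertising one (hypothetical) privacy-preserving skill."""
    return {
        "name": "Acme Procurement Agent",  # hypothetical agent
        "description": "Evaluates supplier formulations via secure computation.",
        "url": base_url,
        "version": "0.1.0",
        "capabilities": {"streaming": True, "pushNotifications": False},
        "defaultInputModes": ["text/plain"],
        "defaultOutputModes": ["text/plain"],
        "skills": [
            {
                "id": "private-set-intersection",  # hypothetical skill id
                "name": "Private set intersection",
                "description": "Intersects customer lists without revealing non-matches.",
                "tags": ["privacy", "mpc", "psi"],
            }
        ],
    }


if __name__ == "__main__":
    base = "https://agents.example.com"
    print(agent_card_url(base))
    print(json.dumps(build_agent_card(base), indent=2))
```

Because the card is plain JSON at a predictable location, a partner agent can discover capabilities (including privacy‑preserving skills) without any bespoke integration step.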

[1]: https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/ "Announcing the Agent2Agent Protocol (A2A)"

## B. Why Does A2A Need Privacy‑Preserving Collaboration?

The power of agent‑to‑agent (A2A) workflows comes from rich, machine‑speed context exchange—yet that same context is often packed with prompts, embeddings, and proprietary data that neither side can afford to disclose. Imagine a fraud‑detection agent at Bank A that holds a proprietary risk model and a counterpart at Bank B that owns fresh transaction streams: to spot a cross‑bank mule network they must run the model on Bank B’s data, but sharing either the model weights or raw transactions would violate competitive, regulatory, and customer‑privacy constraints. Similar collisions appear when a hospital’s FDA‑cleared imaging model needs genomic inputs from an external lab, or when a retailer’s demand‑elasticity engine must consume a supplier’s real‑time costs. Without a privacy layer, every A2A hand‑off is a new leakage surface—plaintext payloads travel through logs, observability pipes, and downstream agents, multiplying exposure with each hop.

### B.1 Why Traditional **Privacy** Controls Collapse in Agent‑to‑Agent Collaboration

| Legacy Privacy Method | Privacy Breakdown in an A2A World |
| --- | --- |
| **Static “least‑privilege” roles** | Agents re‑plan on the fly; fixed roles give either **data starvation** (task fails) or **over‑fetch** (private info scooped up “just in case”). |
| **One‑time user consent pop‑ups** | Agents transact 24/7 after the pop‑up; there’s **no ongoing, granular consent**, so data keeps flowing long after the user’s context has changed. |
| **Silo‑based data segregation** | Agents must fuse insights across silos; once one silo grants access, data can be **re‑combined and re‑identified** downstream, so silos leak through agent chains. |
| **Long‑lived API keys / OAuth scopes** | A single credential leaked via prompt injection opens the entire data trove; agents can **trade or embed tokens** inside prompts, invisible to legacy DLP. |
| **Manual redaction / DLP regex** | Agents generate new embeddings and summaries; sensitive data can be **encoded or paraphrased**, bypassing regex masks and making exfiltration hard to spot. |
| **Periodic audits & after‑the‑fact logs** | By the time humans inspect logs, the agent may have shared data with a dozen other agents; **irreversible exposure** precedes detection. |
| **Trust‑based partner contracts** | Contracts can’t stop a misaligned or hijacked agent; privacy promises must rest on **code enforcement** and cryptographic proof, not paper agreements. |

:::info

**Core insight:** Traditional privacy tools assume static scopes, human‑speed actions, and clear perimeters. Autonomous agents break all three assumptions—so privacy must become **programmable, real‑time, and cryptographically enforced** to keep data safe while agents collaborate.

:::

Embedding cryptographic computing inside the A2A data path lets agents compute jointly, return only the minimal output (fraud score, risk flag, clearing price), and prove compliance, all while keeping each party’s sensitive IP and data mathematically opaque. In short, if context is king, privacy‑preserving computation is its armor: the only way autonomous agents can collaborate at scale without turning every workflow into a security risk.

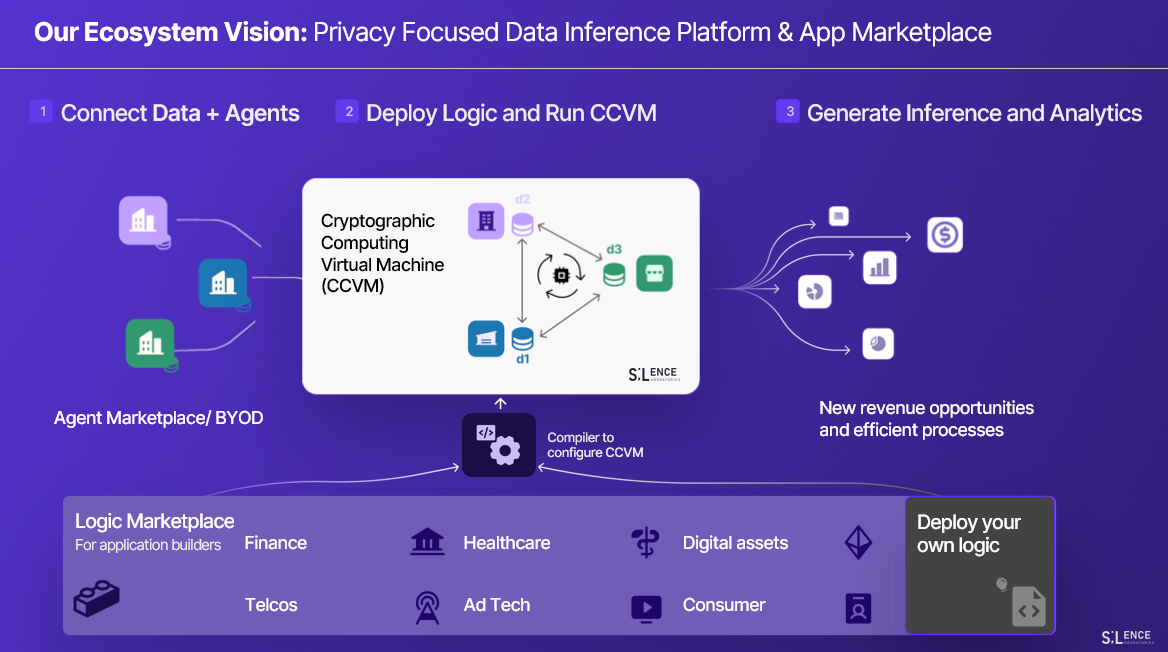

## C. About Silence Laboratories and Cryptographic Collaboration Offerings

Silence Laboratories specializes in cryptographic computing infrastructure that enables secure multi-party collaboration. Our Cryptographic Computing Virtual Machine (CCVM) allows enterprises to perform joint computations on sensitive data while maintaining mathematical privacy guarantees.

### C.1 CCVM for Secure Collaboration

:::info

:bulb: **Genesis of CCVM:** Traditional computing poses significant security risks—especially in finance, healthcare, and government—where data aggregation and transfer frequently expose sensitive information to unauthorized access, tampering, or breaches. Centralized data repositories remain vulnerable single points of failure. These risks create friction in business processes, stifle collaboration, and slow down operations due to the need for constant decryption or manual intervention.

Cryptographic computing solves these challenges by enabling computation directly on encrypted data, preserving privacy throughout the entire workflow. The Cryptographic Computing Virtual Machine (CCVM) from Silence Laboratories employs advanced techniques such as Homomorphic Encryption and Secure Multi-Party Computation. This ensures that data security is maintained without the need for decryption or centralization, accelerating decision-making, unlocking secure cross-organization collaboration, and creating new opportunities that were previously hampered by privacy or compliance concerns.

:::

#### Core Tools

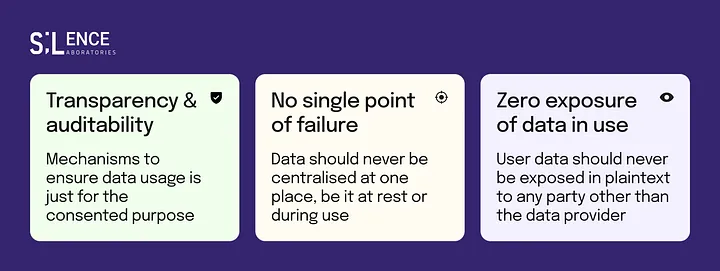

Our toolkit leverages advanced cryptographic techniques, including Multi-Party Computation (MPC), to enable secure collaboration on sensitive financial data without exposing raw inputs. It allows multiple financial institutions to plug in their private datasets and jointly compute any operation or function, receiving only the aggregate output, while keeping their individual data completely confidential. The toolkit is built around the following design principles:

The Cryptographic Computing Virtual Machine (CCVM) combines traditional virtualization with advanced cryptographic techniques to perform computations on encrypted data without requiring decryption at any stage. Unlike conventional virtual machines, which rely on unencrypted data for computation, the CCVM processes data using robust cryptographic methods like Homomorphic Encryption (HE), Secure Multiparty Computation (SMPC), and Zero-Knowledge Proofs (ZKPs). This ensures that data remains private and secure throughout the computational process, with no need to expose raw data.

Secure Multiparty Computation (MPC) is a broad and rapidly advancing discipline, with foundational results from the 1980s seeding a plethora of works that comprise the state of the art today. From a relatively early stage, a diversity of techniques was developed to suit different deployment scenarios, such as garbled circuits for high latency settings [[Yao86](https://ieeexplore.ieee.org/document/4568207), [BMR90](https://www.cs.ucdavis.edu/~rogaway/papers/bmr90)], and secret-sharing based protocols when bandwidth is more constrained [[BGW88](https://mit6875.github.io/PAPERS/BGW.pdf), [GMW87](https://dl.acm.org/doi/10.1145/28395.28420)]. Today, the tradeoffs offered are much more fine-grained due to a spectrum of efficiency profiles, and subtle aspects such as deployment complexity and compatibility with standards. By eliminating the need for data decryption or centralization, cryptographic computing ensures that data remains private and secure throughout the computation process. This approach not only mitigates the vulnerabilities of current methods but also opens up new opportunities for secure, efficient, and compliant data collaboration across multiple parties.
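To give a feel for the secret‑sharing family of protocols, here is a toy secure sum in which several institutions learn only the total of their private inputs. It uses plain additive secret sharing over a prime field, is secure only against semi‑honest (honest‑but‑curious) parties, and simulates all parties in one process; it illustrates the idea and is not the CCVM's actual protocol. The modulus and sample values are arbitrary choices.

```python
import secrets

Q = 2**61 - 1  # prime modulus for the toy field; inputs and the total must stay below Q


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n additive shares that sum to value mod Q."""
    shares = [secrets.randbelow(Q) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % Q)
    return shares


def secure_sum(inputs: list[int]) -> int:
    n = len(inputs)
    # Party i splits its input and sends share j to party j.
    dealt = [share(x, n) for x in inputs]
    # Party j adds the shares it received; any set of fewer than n shares
    # of an input is uniformly random, so no single party learns that input.
    partial_sums = [sum(dealt[i][j] for i in range(n)) % Q for j in range(n)]
    # Publishing the partial sums reveals only the total.
    return sum(partial_sums) % Q


if __name__ == "__main__":
    exposures = [120_000, 75_500, 310_250]  # e.g. three banks' private exposures
    print(secure_sum(exposures))  # 505750
```

Addition is "free" here because shares add locally; multiplication needs extra interaction, which is where protocol design choices (rounds versus bandwidth) start to matter.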

The "virtual machine" concept is applicable because the CCVM abstracts the computational environment, isolating resources and ensuring secure execution. However, its key innovation lies in executing operations on encrypted data without data centralization. This decentralized approach allows data from multiple parties—such as healthcare institutions—to be securely analyzed without ever being aggregated, maintaining privacy and regulatory compliance.

In sum, the CCVM provides a secure, mathematically verifiable environment for distributed computation on encrypted data, offering advanced privacy protections and ensuring data integrity without the risks associated with traditional data movement or centralization.

## D. Our Initiatives on Privacy Preserving Secure Collaboration for A2A Protocol

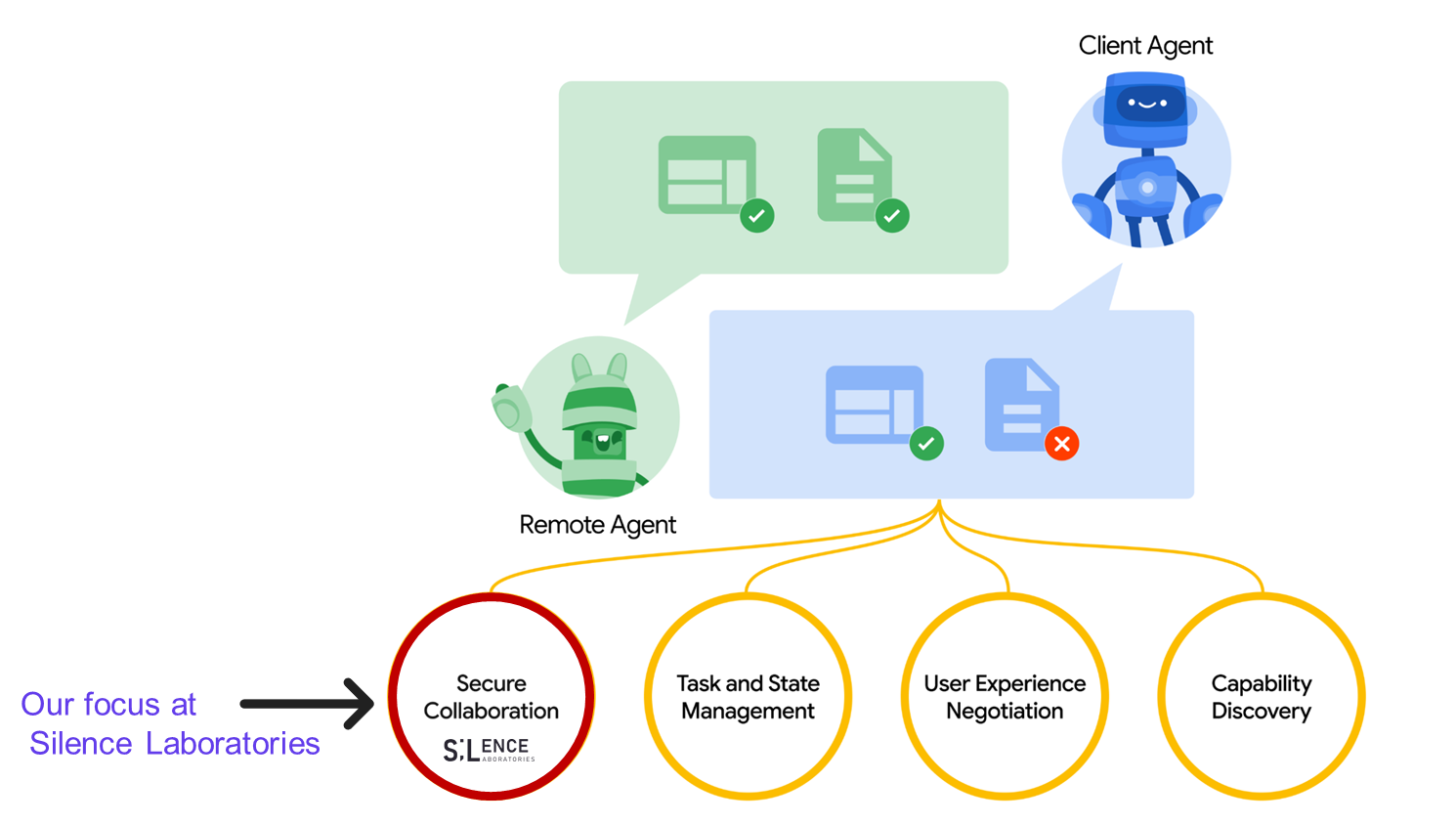

Secure collaboration between agents is poised to be an essential enabler of wider enterprise adoption of A2A. As the figure below shows, secure collaboration is also one of the core themes of Google's A2A launch.

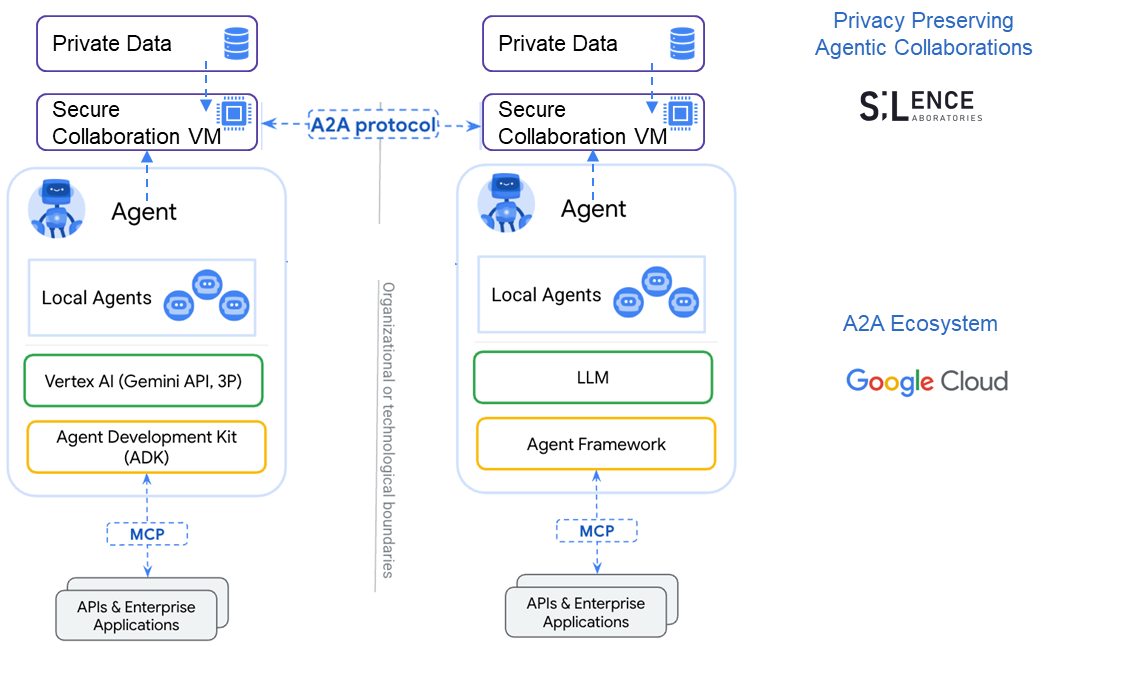

**A2A-CCVM:** We propose adding secure computation atop the A2A protocol, so that two agents can compute on their respective private data without revealing it to one another. The diagram depicts two organizations whose AI agents each sit behind a **Secure Collaboration VM, our “A2A CCVM” (Cryptographic Computing VM)**, connected by Google’s A2A protocol. Each agent ingests its own private data and routes it through its A2A CCVM, which collaborates with the peer CCVM to run a two‑party secure computation (a form of MPC). The agents can thus share results and coordinate tasks without ever exposing underlying prompts, embeddings, or user secrets. Beneath each agent, local sub‑agents use an LLM stack (Vertex AI/Gemini on the left, a generic LLM on the right) and interface with internal APIs via MCP, all protected across organizational boundaries by the privacy‑preserving CCVM layer.

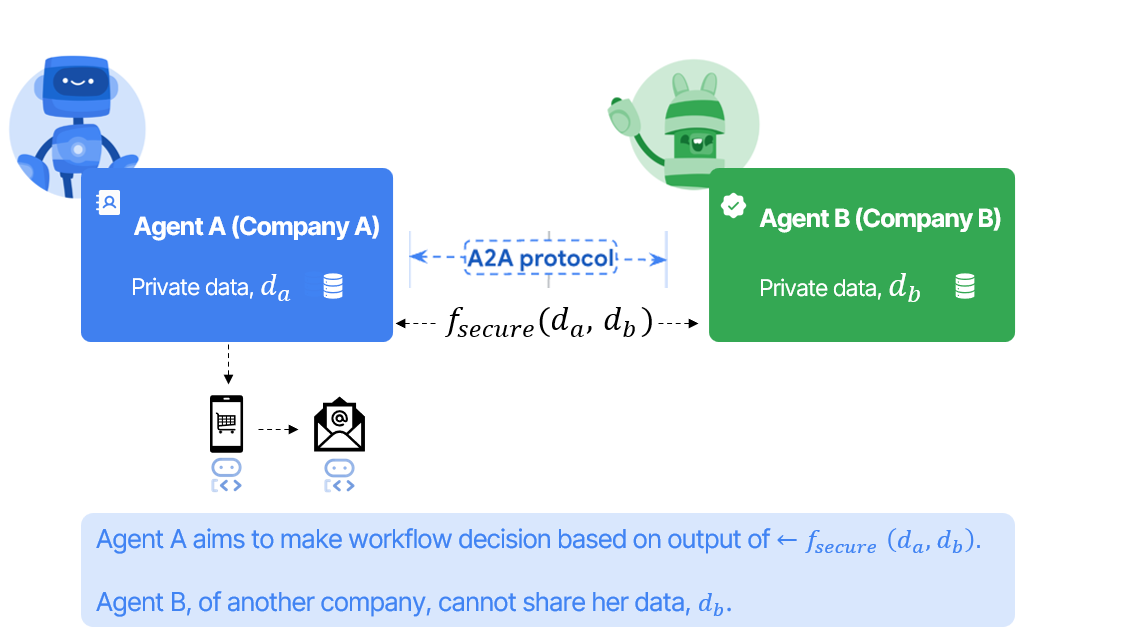

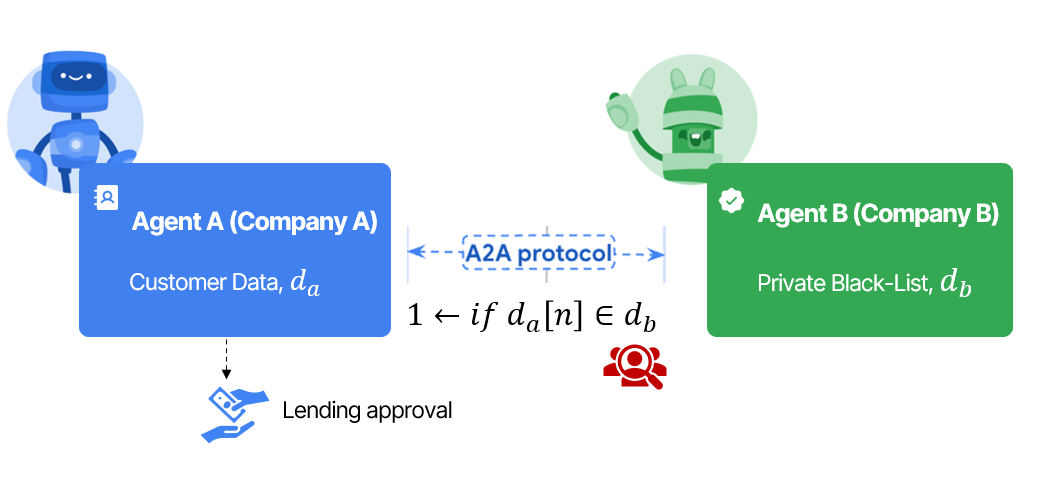

The above graphic illustrates a **privacy‑preserving agent‑to‑agent workflow between two companies**. On the left, *Agent A* (blue box) belongs to Company A and holds its own sensitive dataset $d_a$. On the right, *Agent B* (green box) belongs to Company B and guards a different private dataset $d_b$. Neither firm is willing—or legally allowed—to reveal its raw data outside its boundary.

Instead of sharing data, the two agents connect over the **A2A protocol** (dashed arrow in the center). A2A provides a vendor‑neutral “messaging lane” so autonomous agents can exchange requests, intermediate states, and results while respecting each organization’s authentication and access controls. Crucially, on top of that transport, the agents invoke a **secure computation $f_{\text{secure}}(d_a,d_b)$**. This is a multiparty‑computation (MPC) routine that lets them jointly compute whatever metric or decision they need—credit‑risk score, policy match, fraud signal—without exposing underlying records. Each side only sees the final output relevant to its workflow; all intermediate data stay encrypted or secret‑shared.

After the secure function returns, Agent A can feed the privacy‑preserving result into its own downstream tooling (shown as icons for a mobile app, email, and other local agents). Agent B has not revealed $d_b$ at any point, yet the collaboration still yields the cross‑enterprise insight Agent A requires. The bottom caption summarizes the trust model: *Agent A wants a decision that depends on both datasets, but Agent B’s data must remain private.* By layering MPC atop the A2A protocol, both companies achieve automated cooperation **without compromising data custody or regulatory compliance**—the defining goal of “Private A2A.”

## E. Popular $f_{\text{secure}}$ Functions in A2A-CCVM

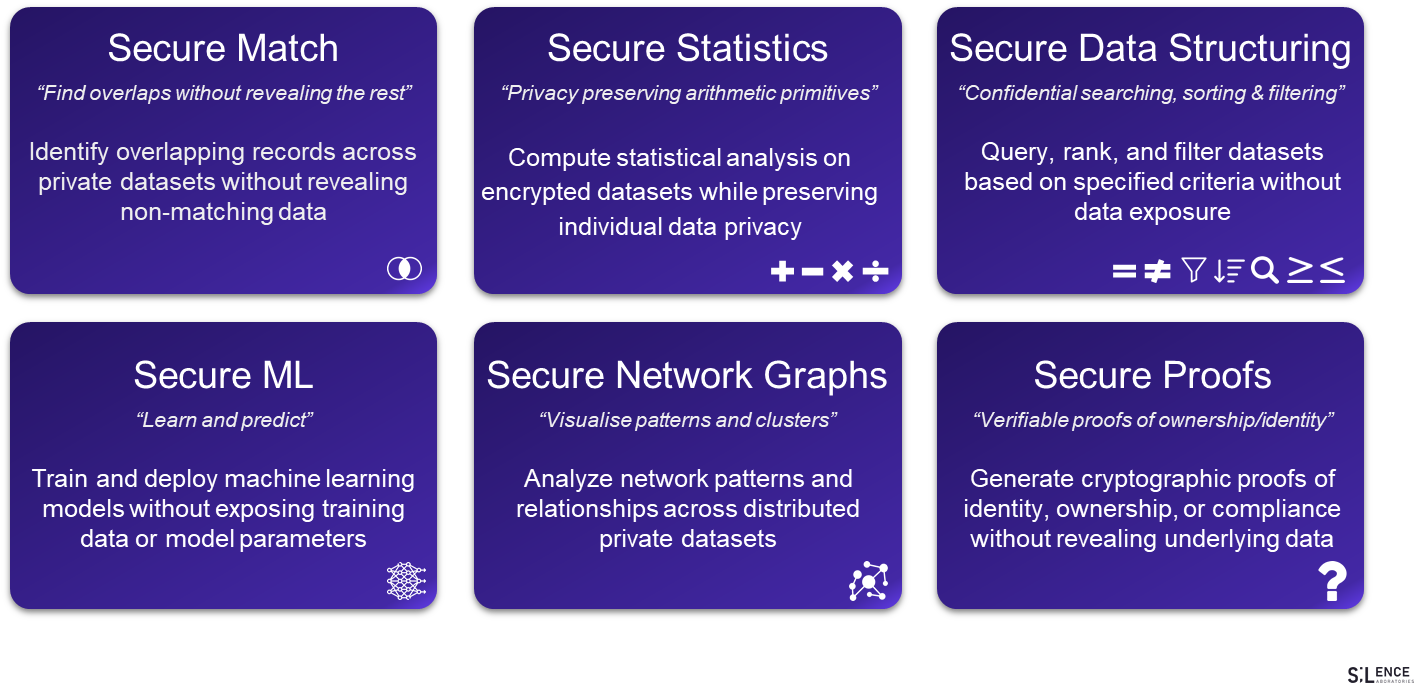

In the A2A setting, the secure function $f_{\text{secure}}$ is a blank canvas: it can be any mathematically expressible computation the two agents need, from a simple Boolean check to a full machine-learning inference. This means the same privacy-preserving rail can power very different business flows.

In finance, it might be a joint credit-risk score or a private set-intersection that flags overlapping fraud patterns between two banks. In supply-chain, it could be a linear-program optimization that balances a supplier's production schedule against a retailer's real-time demand without exposing either side's costs or sales. In automated hiring, it may reduce to a secure "background-check pass/fail" on encrypted résumé data against a sanctions database. In regulatory reporting, it can become a zero-knowledge proof that a bank's capital ratios exceed legal thresholds while the regulator never sees the underlying balance sheet.

Because MPC operates on secret-shared (or encrypted) values rather than plaintext, the same A2A transport can host any of these functions by simply swapping in the required $f_{\text{secure}}$, letting agents collaborate across industries while their organizations keep proprietary data and user secrets fully private.
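The "swap in a function" idea can be sketched as a registry of ideal functionalities that both agents agree on by name before a session. Everything below is hypothetical and computed in the clear: the registry, the function ids, and the 8% threshold are illustrations only. It shows *what* each $f_{\text{secure}}$ computes; in A2A-CCVM each entry would instead be executed as an MPC (or zero‑knowledge) protocol so that neither party's input is seen in plaintext.

```python
from typing import Callable

# Hypothetical registry: function id -> ideal functionality f(d_a, d_b).
# Computed in the clear here; an MPC engine would evaluate it privately.
F_SECURE: dict[str, Callable] = {}


def register(name: str):
    """Decorator that adds a function to the registry under an agreed id."""
    def wrap(fn: Callable) -> Callable:
        F_SECURE[name] = fn
        return fn
    return wrap


@register("psi.cardinality")
def psi_cardinality(d_a: set, d_b: set) -> int:
    """How many records the two parties have in common, and nothing else."""
    return len(d_a & d_b)


@register("screening.pass_fail")
def screening_pass(candidate: str, sanctions_list: set) -> bool:
    """Background-check style result: True if the candidate is NOT listed."""
    return candidate not in sanctions_list


@register("capital.ratio_ok")
def capital_ratio_ok(capital: float, risk_weighted_assets: float,
                     threshold: float = 0.08) -> bool:
    """True if capital / risk-weighted assets meets an (illustrative) threshold."""
    return capital / risk_weighted_assets >= threshold


def run(name: str, *inputs):
    """Dispatch to the agreed function; only its return value would be revealed."""
    return F_SECURE[name](*inputs)


if __name__ == "__main__":
    print(run("psi.cardinality", {"a", "b", "c"}, {"b", "c", "d"}))
    print(run("capital.ratio_ok", 9.0, 100.0))
```

Keeping the function id in the agreed session parameters means the transport and the surrounding agent logic stay the same while the computed function changes.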

## F. Use Cases: Design, Deployment, and Target Partners

The versatility of secure multi-party computation with A2A-CCVM unlocks privacy-preserving collaboration for a broad spectrum of agentic workflows. Below, we detail major cross-industry use cases—presented in a parallel, digestible format—highlighting the privacy challenges, agent collaboration scenarios, and business impact.

### F.1 Agentic Data Enrichment: Private Search, Match and Retrieval (PSI and PIR)

Imagine that Company A's Agent A holds a private list, while Company B's Agent B has access to a much larger and richer private list. With A2A-CCVM, Agent A can gain insights from the collaboration without either side exposing set members or raw datasets, and can run private queries without revealing her intent. For such cases, A2A-CCVM enables two functions under two-party SMPC: private set intersection (PSI) and private information retrieval (PIR).

There are many scenarios in which Agent A would like to enrich her list with Agent B's, particularly in adtech, martech, and fintech use cases such as lending, risk analysis, anti-fraud, and sanctions-list checks. Inventory matching is another good fit for enterprise agents and can be enabled through a similar construct.

**Demo:** A fintech agent checks customer identities against a credit bureau's database without revealing its customer list or accessing the full credit database: [github.com/silence-laboratories/A2A-PSI](https://github.com/silence-laboratories/A2A-PSI).
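One classic way to build PSI is the Diffie-Hellman-style protocol based on commutative blinding. We do not know which construction the A2A-PSI demo uses, so the following is a stand-in sketch rather than that repository's code. Each side raises hashed items to a secret exponent; because $(h^a)^b = (h^b)^a$, doubly blinded values match exactly when the underlying items match. The toy group (integers modulo the Mersenne prime $2^{127}-1$), the hash-to-group mapping, and the helper names are assumptions chosen for brevity and are not secure parameter choices. The sketch is semi-honest only: it does not by itself stop Agent B from deviating from the protocol.

```python
import hashlib
import math
import secrets

# Toy group Z_P^* with the Mersenne prime P = 2**127 - 1. Illustration only; real
# deployments use a standardized elliptic-curve group with proper hash-to-curve.
P = 2**127 - 1


def hash_to_group(item: str) -> int:
    """Map an item to a nonzero element of Z_P^* (toy hash-to-group)."""
    digest = int.from_bytes(hashlib.sha256(item.encode()).digest(), "big")
    return digest % (P - 1) + 1


def keygen() -> int:
    """Secret exponent coprime to P - 1, so v -> v**k mod P is a bijection."""
    while True:
        k = secrets.randbelow(P - 2) + 1
        if math.gcd(k, P - 1) == 1:
            return k


def blind(values, key: int) -> list[int]:
    return [pow(v, key, P) for v in values]


def dh_psi(items_a: list[str], items_b: list[str]) -> set[str]:
    """Agent A learns the intersection and |B|; Agent B learns only |A|."""
    key_a, key_b = keygen(), keygen()
    # A -> B: H(x)^a for each of A's items (order kept so A can map results back).
    msg_a = blind((hash_to_group(x) for x in items_a), key_a)
    # B -> A: (H(x)^a)^b in the same order, plus H(y)^b for B's items, shuffled.
    double_a = blind(msg_a, key_b)
    msg_b = blind((hash_to_group(y) for y in items_b), key_b)
    secrets.SystemRandom().shuffle(msg_b)
    # A computes (H(y)^b)^a and keeps its items whose double-blinded value appears.
    double_b = set(blind(msg_b, key_a))
    return {x for x, v in zip(items_a, double_a) if v in double_b}


if __name__ == "__main__":
    customers = ["alice@a.com", "bob@b.com", "carol@c.com"]
    bureau = ["bob@b.com", "dave@d.com", "carol@c.com", "erin@e.com"]
    print(sorted(dh_psi(customers, bureau)))  # ['bob@b.com', 'carol@c.com']
```

Choosing exponents coprime to $P-1$ makes blinding a permutation of the group, so there are no false matches beyond hash collisions.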

**Use Cases:**

- Financial agents enriching data with brokers' agents for customer segmentation,

- Anti‑fraud agents privately cross‑checking transactions against a company’s confidential blacklist or against sanctions‑list monitoring agents,

- Lending agents working with banking agents to shortlist low‑risk targets from banking transaction histories, without accessing raw transaction data,

- Intent‑based secure matching: agents building rich intents from users' interactions and collaborating with other discovery agents in private settings.

:::success

:closed_lock_with_key: **Guarantees**

- Agent A learns nothing about $d_b$, except what she searched for,

- Agent B cannot cheat or tamper with secure search,

- Agent B does not learn what Agent A searched for.

:::

**Our joint Targets/Partners (Silence + Google A2A)** can be some of the following companies:

:::info

**A. Intuit <img src="https://md.silencelaboratories.com/uploads/9354e4b7-ae82-4192-a9a5-4b0c04fe5223.png" alt="diagram" width="10%">:** An Intuit‑hosted agent (sitting across QuickBooks, TurboTax, Mailchimp, Credit Karma) can invoke Secure A2A with PSI/PIR to prove revenue to a lender, cross‑check a taxpayer’s figures against encrypted IRS data, or match Mailchimp audiences with a partner—all while each counter‑party sees only the “yes/no” or aggregate result and Intuit never exposes raw ledgers, emails, or credit files.

**B. Cohere <img src="https://md.silencelaboratories.com/uploads/8fb0a509-7ed0-4371-adc8-7f8ef5288d73.png" alt="diagram" width="10%">:** For Cohere AI, Secure A2A with PSI/PIR would let its language‑model agents collaborate on customer data that Cohere itself is never allowed to see. Imagine two firms—an e‑commerce brand and a payments provider—each keeps its own text logs and embedding stores. Through A2A their Cohere‑powered agents could run a private‑set‑intersection over embeddings to spot overlapping fraud signals or high‑value customer segments; only a match score is revealed, never the raw vectors or messages. Likewise, a Cohere retrieval‑augmented‑generation (RAG) agent could issue PIR queries into a partner’s knowledge base, fetching the precise passages needed for a response while leaving the rest of the corpus opaque. This unlocks joint use cases—cross‑company intent classification, federated content moderation, secure multi‑tenant RAG chatbots—without violating NDAs, data‑residency rules, or LLM prompt‑leak concerns, and positions Cohere as a trusted model layer inside privacy‑preserving agent ecosystems.

:::


### F.2 Agentic Private Evaluation: Procurement and Supply Chain

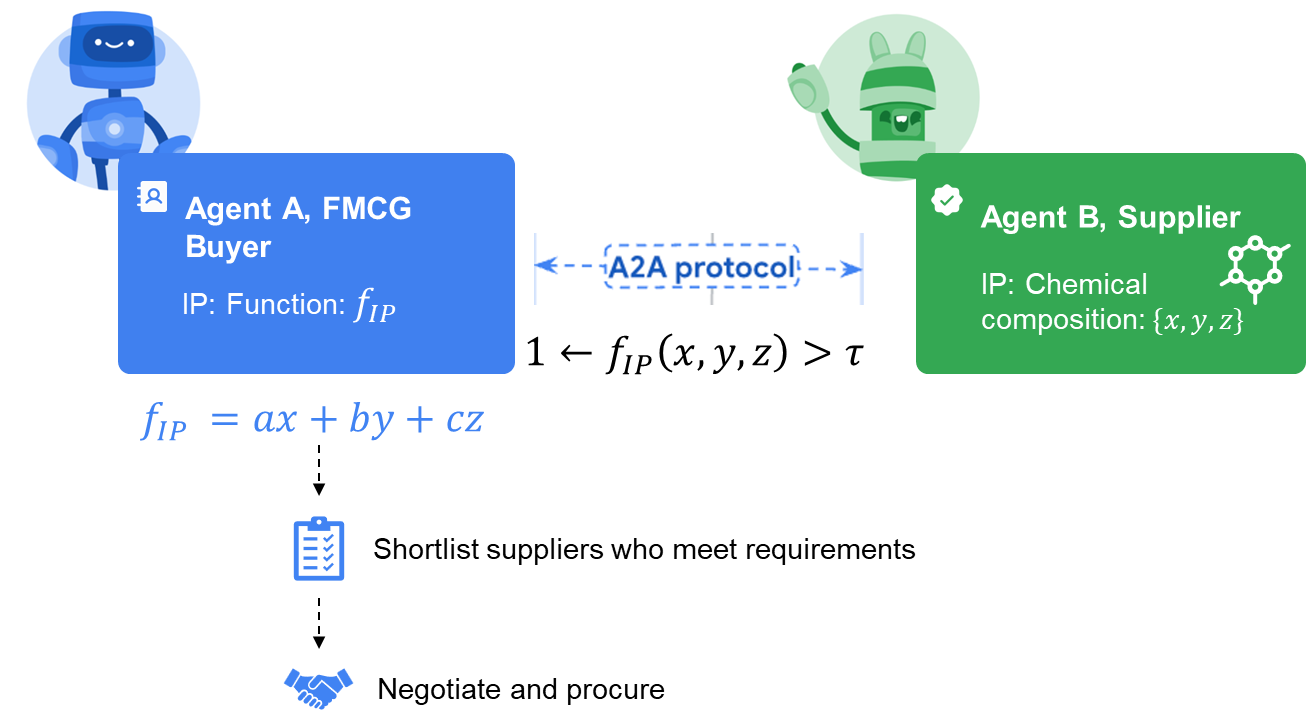

Imagine a procurement workflow for specialty chemicals. The **buyer’s agent** (e.g., for a perfume, FMCG, or coatings manufacturer) owns a proprietary “fit‑and‑cost” formula, its IP function. This function takes a supplier’s chemical composition $\{x,y,z\}$ and returns a single score telling the buyer whether the mixture meets performance specs at target cost. On the other side, the **supplier’s agent** guards the exact ratios of $x,y,z$ as a trade secret.

Instead of exchanging spreadsheets or NDAs, the two agents link over the A2A protocol and invoke a **secure two‑party computation**. The supplier secretly injects its composition; the buyer secretly provides the coefficients of its fit‑and‑cost function; and the encrypted joint computation returns only a thresholded result—“score ≥ τ” (pass) or “score < τ” (fail). The buyer sees whether the formula is acceptable for procurement *without ever learning the precise recipe*, while the supplier gains proof that the buyer’s proprietary scoring was applied but never learns the scoring weights themselves.

From there, the agents can continue automated negotiation: if the current batch fails, the supplier’s agent can adjust proportions or suggest additives, rerun the secure check, and iterate until the pass signal is returned—all without either side revealing its IP. The outcome is a privacy‑preserving, agent‑driven sourcing process that de‑risks trade‑secret leakage and speeds time‑to‑contract.
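A minimal sketch of the arithmetic core of this evaluation, under several assumptions: the buyer's fit‑and‑cost function is linear ($w_x x + w_y y + w_z z$), inputs are integer‑scaled, and a hypothetical trusted dealer pre‑distributes Beaver multiplication triples. Both agents secret‑share their inputs, multiply them with Beaver triples, add the products locally, and open only the final score. Opening the score corresponds to the "exact score" option listed in the guarantees; returning just the pass/fail bit against $\tau$ would additionally require a secure comparison protocol, omitted here. Both parties are simulated in one process, and all names and numbers are illustrative.

```python
import secrets

Q = 2**61 - 1  # prime field modulus; scores must stay in [0, Q)


def share(value: int) -> tuple[int, int]:
    """Two-party additive sharing: share0 + share1 = value (mod Q)."""
    r = secrets.randbelow(Q)
    return r, (value - r) % Q


def open_shares(s: tuple[int, int]) -> int:
    return (s[0] + s[1]) % Q


def dealer_triple():
    """Hypothetical trusted dealer: shares of random a, b and c = a*b."""
    a, b = secrets.randbelow(Q), secrets.randbelow(Q)
    return share(a), share(b), share(a * b % Q)


def beaver_mul(x: tuple[int, int], y: tuple[int, int]) -> tuple[int, int]:
    """Multiply two shared values using one Beaver triple."""
    a, b, c = dealer_triple()
    # Parties open d = x - a and e = y - b; these are masked by random a, b.
    d = open_shares(((x[0] - a[0]) % Q, (x[1] - a[1]) % Q))
    e = open_shares(((y[0] - b[0]) % Q, (y[1] - b[1]) % Q))
    # x*y = c + d*b + e*a + d*e; the public d*e term is added by party 0 only.
    z0 = (c[0] + d * b[0] + e * a[0] + d * e) % Q
    z1 = (c[1] + d * b[1] + e * a[1]) % Q
    return z0, z1


def private_score(composition: list[int], weights: list[int]) -> int:
    """Supplier holds composition, buyer holds weights; only the dot product is opened."""
    total = (0, 0)
    for xi, wi in zip(composition, weights):
        prod = beaver_mul(share(xi), share(wi))
        total = ((total[0] + prod[0]) % Q, (total[1] + prod[1]) % Q)  # local addition
    return open_shares(total)


if __name__ == "__main__":
    supplier_recipe = [40, 35, 25]  # percent of x, y, z (supplier's secret)
    buyer_weights = [3, 5, 2]       # fit-and-cost coefficients (buyer's secret)
    tau = 300                       # acceptance threshold
    score = private_score(supplier_recipe, buyer_weights)
    print(score, "pass" if score >= tau else "fail")  # 345 pass
```

Each negotiation round in the loop described above would simply call the evaluation again with the supplier's adjusted recipe.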

:::success

:closed_lock_with_key: **Guarantees**

* **Input privacy (Company B)** – Agent A learns nothing about chemical composition $x, y, z$ beyond the single bit/result it is entitled to receive (e.g., “pass/fail” or the exact score).

* **Input privacy (Company A)** – Agent B learns nothing about the coefficients or logic of $f_{\text{IP}}$; it sees only the final outcome.

* **Computation integrity** – Neither agent can alter, shortcut, or bias the secure collaboration (MPC) protocol; tampering would be detected and the computation aborts.

* **Output correctness** – Both agents are cryptographically assured that the returned result is exactly what $f_{\text{IP}}$ computes on the true inputs, no matter who initiates the call.

* **No side‑channel leakage** – Message transcripts, timing, and intermediate states reveal no extra information about individual IPs.

* **Auditability** – A verifiable transcript (or zero‑knowledge proof) can be logged to show the function was executed once, on agreed inputs, without exposing those inputs.

:::

**Our joint Targets/Partners (Silence + Google A2A)** can be some of the following companies:

:::info

**A. SAP and Oracle** <img src="https://md.silencelaboratories.com/uploads/2077ee7f-af5c-4fe2-a6e1-2605b7850bc2.png" alt="diagram" width="7%"> <img src="https://md.silencelaboratories.com/uploads/29ccb65b-90e0-46d4-96b2-0fa1ed196e4d.png" alt="diagram" width="10%">: In pharma, high-end FMCG, and specialty‑chemicals sourcing, a buyer often needs to test a supplier’s confidential formulation against its own proprietary “fit‑cost‑risk” model before any data can legally leave either side. SAP’s S/4HANA and Oracle Fusion ERP already control the purchasing workflows (BOM specs, supplier quality scores, price breaks), so embedding a Secure A2A capsule inside these platforms lets their native agents run that evaluation without exposing IP.

The supplier’s agent posts secret‑shared ratios of a new compound; the buyer’s agent supplies encrypted coefficients of its evaluation formula. No raw recipe leaves the supplier, no proprietary scoring logic leaves the buyer, yet procurement can auto‑negotiate spec tweaks or pricing in real time. Because both ERPs already enforce role‑based security, audit trails, and partner APIs, they are natural anchors for this privacy‑preserving evaluation loop—turning weeks of NDA‑bound lab exchanges into seconds of secure agent dialogue.

:::

**Business impact**

* **Faster sourcing cycles** – agents validate specs in seconds instead of weeks of NDAs and manual data rooms.

* **IP and compliance protection** – proprietary recipes, cost models and pricing rules never leave their owner’s boundary.

* **Multi‑tier resilience** – downstream tiers (sub‑suppliers) can participate via chained Secure A2A calls, letting the Tier‑1 supplier aggregate feasibility without revealing sub‑tier secrets to the OEM.
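The multi-tier point can be illustrated with pairwise-mask secure aggregation. This is a sketch that assumes feasibility is expressed as a summable quantity, such as each sub-supplier's available capacity.

Each pair of sub-suppliers shares a random mask. One member of the pair adds the mask and the other subtracts it, so every individual value the Tier-1 supplier receives looks random, while the masks cancel in the total. Here the masks are generated in one place purely for simulation. In practice each pair would derive its mask from a key agreement. Dropouts are not handled, and the capacities are hypothetical.

```python
import secrets

P = 2**61 - 1  # modulus; must exceed any possible total


def pairwise_masks(n: int) -> dict[tuple[int, int], int]:
    """One random mask per pair i < j (stands in for a per-pair key agreement)."""
    return {(i, j): secrets.randbelow(P) for i in range(n) for j in range(i + 1, n)}


def masked_input(i: int, value: int, n: int, masks: dict[tuple[int, int], int]) -> int:
    """Sub-supplier i adds masks shared with higher-indexed peers and subtracts the rest."""
    y = value
    for j in range(n):
        if i < j:
            y += masks[(i, j)]
        elif j < i:
            y -= masks[(j, i)]
    return y % P


def aggregate(masked: list[int]) -> int:
    """Tier-1 sums the masked values; every mask appears once with + and once with -."""
    return sum(masked) % P


capacities = [1200, 800, 450]  # hypothetical private capacities of three sub-suppliers
masks = pairwise_masks(len(capacities))
received = [masked_input(i, v, len(capacities), masks) for i, v in enumerate(capacities)]
print(aggregate(received))  # 2450
```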

By embedding **A2A-CCVM**-based secure A2A collaboration into the SAP and Oracle ecosystems, procurement teams would gain fully automated, privacy‑preserving evaluations, turning traditional RFQs into real‑time, secure agent negotiations.

### F.3 Agentic Private Trading

Agentic trading, in which traders' agents submit orders on their behalf, is around the corner, with A2A under the hood for order matching and secure execution. Such collaborative trading will become ubiquitous if the trading strategies of both buyer and seller agents can be protected.

In a **Private Dark Pool** A2A scenario, autonomous trading agents from multiple institutions securely collaborate to match large orders without revealing sensitive data. Using Google’s Agent-to-Agent protocol alongside **Multi-Party Computation (MPC)** and a **CCVM**, these agents negotiate trades while keeping prices and volumes encrypted. The result is a *zero-trust* trading environment where only the final match is disclosed – enabling trustless, efficient block trades with full privacy and integrity.

:::success

**Guarantees:**

* **Data Privacy:** Order details remain encrypted end-to-end (no leakage to any party).

* **Fair Matching:** No front-running or bias – trades execute only when terms align, with cryptographic proof.

* **Zero-Trust Security:** Not even the dark pool operator can access raw order data, eliminating insider threats.

:::
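To make "only the final match is disclosed" concrete, the sketch below writes out the plaintext *ideal functionality* that an MPC computation inside the CCVM would evaluate on encrypted orders. The code itself provides no privacy; it only specifies what may be revealed.

It assumes trades execute at a public reference midpoint, a common dark-pool convention. Pricing at the midpoint of the two limits would let each side back out the other's limit from the fill price. The filled quantity still reveals the smaller order's size to the larger side, which any disclosed match inherently does. Class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    limit_cents: int  # buyer: maximum price; seller: minimum price (kept private)
    quantity: int     # kept private unless it is the filled amount


@dataclass(frozen=True)
class Fill:
    price_cents: int
    quantity: int


def ideal_match(buy: Order, sell: Order, reference_mid_cents: int) -> Optional[Fill]:
    """The only output of the private computation: a Fill, or None if terms do not align."""
    if buy.limit_cents >= reference_mid_cents >= sell.limit_cents:
        # Both limits bracket the public reference price: execute there.
        return Fill(reference_mid_cents, min(buy.quantity, sell.quantity))
    return None  # no match; neither limit is disclosed


fill = ideal_match(Order(10050, 5000), Order(10000, 3000), reference_mid_cents=10025)
print(fill)  # Fill(price_cents=10025, quantity=3000)
```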

**Potential joint targets/partners (Silence + Google A2A)** include the following companies:

:::info

* **Financial Institutions:** Investment banks, exchanges, and brokerages operating dark pools or private trading venues.

* **Institutional Traders:** Hedge funds, asset managers, and large investors needing confidential block trade execution (e.g. equities, bonds, crypto OTC).

:::

**Business Impact:**

* **Better Execution:** Enables large trades without moving the market, reducing slippage and improving prices.

* **Client Trust & Volume:** Offers unparalleled confidentiality, attracting more participants and trading volume to the platform.

* **Regulatory Confidence:** Minimizes data misuse risks and builds compliance-ready audit trails, differentiating the service in a heavily regulated industry.

### F.4 Cryptographic Consent

As agent-based workflows handle increasingly sensitive data, ensuring robust data authorization and proving agent identity become essential for privacy and compliance. Conventional authorization methods struggle to keep pace with the scale and dynamism of A2A collaborations.

By adopting mutual TLS (mTLS) across agent communications, each agent can strongly authenticate its counterpart and encrypt the transport channel between them. To further enhance trust and decentralize control, agent identities and data authorization can be managed through distributed key management on the client side. This keeps cryptographic credentials, in particular private keys, under each participant's sole control rather than in a central store, reducing the risk of large-scale compromise.
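The mTLS step can be sketched with Python's standard `ssl` module. This is a minimal sketch assuming each agent holds a certificate issued by a CA that both sides agree to trust. The helper names and file paths are hypothetical. The contexts deliberately start without system CAs, so only the agent CA loaded afterwards is trusted, and both sides refuse a peer that presents no valid certificate.

```python
import ssl


def mtls_context(server_side: bool) -> ssl.SSLContext:
    """Verification policy for one end of an agent-to-agent mTLS channel."""
    protocol = ssl.PROTOCOL_TLS_SERVER if server_side else ssl.PROTOCOL_TLS_CLIENT
    ctx = ssl.SSLContext(protocol)  # no system CAs loaded: trust only the agent CA added later
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # no valid peer certificate, no session (both sides)
    return ctx


def load_agent_credentials(ctx: ssl.SSLContext, cert_path: str, key_path: str,
                           peer_ca_path: str) -> None:
    """Attach this agent's certificate and key, plus the CA used to validate the peer."""
    ctx.load_cert_chain(certfile=cert_path, keyfile=key_path)  # private key stays local
    ctx.load_verify_locations(cafile=peer_ca_path)
```

After loading credentials, the client would wrap its socket with `ctx.wrap_socket(sock, server_hostname=...)`, since `PROTOCOL_TLS_CLIENT` enables hostname checking. The server would use `server_side=True` and can read the authenticated peer agent's identity with `getpeercert()` once the handshake completes.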

For higher assurance, authorization decisions can require a quorum of approvals, formalized via distributed signature schemes (such as threshold signatures or multi-signature protocols). In this model, actions or data disclosures only proceed if a sufficient subset of authorized parties cryptographically consent, ensuring policy compliance even in adversarial or semi-trusted environments.
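Threshold signature schemes generally rest on the same quorum property as Shamir secret sharing: a key is split so that any $t$ of $n$ parties can act together, while $t-1$ parties learn nothing. The sketch below shows only that building block, namely $t$-of-$n$ sharing and Lagrange reconstruction over a prime field. It is not a threshold signature. In a real threshold-signing protocol the key is typically created by distributed key generation and never reconstructed in one place; parties instead combine partial signatures. The field size and function names are illustrative assumptions.

```python
import secrets

P = 2**127 - 1  # Mersenne prime used as the field modulus (illustrative)


def split(secret: int, t: int, n: int) -> list[tuple[int, int]]:
    """Share `secret` so that any t of the n shares reconstruct it."""
    coeffs = [secret] + [secrets.randbelow(P) for _ in range(t - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the degree-(t-1) polynomial
            y = (y * x + c) % P
        shares.append((x, y))
    return shares


def reconstruct(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation of the polynomial at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % P
                den = den * (xi - xj) % P
        secret = (secret + yi * num * pow(den, -1, P)) % P
    return secret


key = secrets.randbelow(P)
shares = split(key, t=3, n=5)
print(reconstruct(shares[:3]) == key)  # True: any quorum of 3 suffices
# Any 2 shares interpolate to an unrelated field element, revealing nothing about key.
```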

**Benefits:**

* Strengthens security by removing single points of failure in key management

* Enables granular, programmable policies for consent and authorization

* Provides verifiable, tamper-evident audit trails for regulatory and legal compliance

* Prevents unauthorized agents from acting unilaterally, enabling truly collaborative trust
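The "tamper-evident audit trail" benefit can be illustrated with a hash chain, one common construction (an assumption here; the section does not name a mechanism). Each entry's hash covers the previous entry's hash, so editing, reordering, or deleting any non-final entry breaks verification. Truncating the tail is only detectable if the latest hash is anchored elsewhere, for example co-signed by the quorum described above. Event fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append(log: list[dict], event: dict) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    body = json.dumps(event, sort_keys=True)  # canonical encoding so hashes are reproducible
    digest = hashlib.sha256((prev + body).encode()).hexdigest()
    log.append({"event": event, "prev": prev, "hash": digest})


def verify(log: list[dict]) -> bool:
    """Recompute the chain from the start; any altered link returns False."""
    prev = GENESIS
    for entry in log:
        body = json.dumps(entry["event"], sort_keys=True)
        if entry["prev"] != prev:
            return False
        if hashlib.sha256((prev + body).encode()).hexdigest() != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


log: list[dict] = []
append(log, {"agent": "buyer-agent", "action": "consent", "scope": "spec-evaluation"})
append(log, {"agent": "supplier-agent", "action": "consent", "scope": "spec-evaluation"})
print(verify(log))  # True
log[0]["event"]["scope"] = "full-disclosure"  # tamper with history
print(verify(log))  # False
```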

## G. R&D Pipeline

Beyond the currently supported features, our R&D pipeline includes building and launching:

- Research:

    - Compilers that convert arbitrary agent logic into sequences of privacy-preserving inference and collaboration steps

    - Cryptographic coupling of consent and collaborative inference

    - Performance optimization of supported functions and expansion of the dictionary of supported functions

- Engineering:

    - Enabling agentic networks and collaborations across cascaded workflows

    - Standalone agentic platforms to design and deploy workflows

## H. Strategic Partnership Framework

### H.1 Google Cloud Platform Integration Opportunities

**Technical Integration**: A2A-CCVM can be designed to integrate with Google Cloud infrastructure:

- Compatibility with Google's Agent Development Kit (ADK) and Agent Engine

- Integration with Vertex AI for ML workloads requiring privacy

- Deployment options using Google's containerized infrastructure

- Monitoring through the Google Cloud Operations suite

**Confidential Computing Compatibility**: A2A-CCVM operations could leverage Google's Confidential Space:

- Execution within Trusted Execution Environments (TEEs) for additional security

- Hardware-level attestation capabilities where required

- Enhanced security posture for zero-trust environments

### H.2 Partner Ecosystem Enhancement

**Enabling Regulated Industry Use Cases**: Privacy-preserving capabilities could enable A2A partners to address new markets:

- SAP: Privacy-preserving integrations for regulated ERP workflows

- Salesforce: Compliant cross-organization customer data analysis

- ServiceNow: Secure workflow orchestration across enterprise boundaries

**Technical Synergies**: A2A-CCVM complements existing partner capabilities without requiring fundamental changes to their agent implementations.

### H.3 Compliance and Security Framework

**Technical Foundation for Regulatory Requirements**: A2A-CCVM's cryptographic architecture follows privacy-by-design principles that may support compliance efforts for:

- GDPR privacy protection requirements

- HIPAA technical safeguards for healthcare data

- Financial services data protection standards

- Emerging AI governance frameworks

*Note: Regulatory compliance assessment would require legal review of specific implementations and use cases.*

**Audit-Ready Infrastructure**: A2A-CCVM includes technical features that support compliance workflows:

- Cryptographic proofs of computation integrity

- Configurable consent mechanisms for data usage policies

- Comprehensive audit trails for regulatory review

- Zero-knowledge verification capabilities

## I. Value for Ecosystem Partners

**For the Google A2A Ecosystem:**

* Removes the primary adoption barrier (privacy concerns)

* Enables new classes of sensitive data workflows

* Differentiates A2A from competitors with built-in privacy

* Accelerates enterprise cloud migration

**For Enterprise Partners:**

- Unlocks revenue from secure data collaboration

- Reduces compliance risk while expanding partnerships

- Accesses proven cryptographic technology without R&D investment

---

> *Please reach out to info@silencelaboratories.com for more details and discussion.*